Fall is officially upon us (in the Northern Hemisphere). The leaves are changing color… the kids are going back to school… and the temperature is dropping. But one thing remains constant: Gnome Likes!!!

In this week’s recap, we have an exciting shootout for backlink data providers, a list of common technical SEO problems (and solutions), numerous ways to become a better SEO, and 10 tips for generating more social engagement on your website!

Can you feel the excitement?! Let’s do this…

Comparing Backlink Data Providers

This is an excellent backlink data provider shootout by Rob Kerry. It analyzes 4 providers (SEOmoz’s Mozscape, MajesticSEO’s Fresh index, Ahrefs’ link data, and Ayima’s index) along a number of important link-related dimensions.

First, Rob investigates which provider has the largest result set for Search Engine Land’s website (i.e., which provider returns the largest number of backlinks for the site). MajesticSEO is the clear winner, followed by Ahrefs.

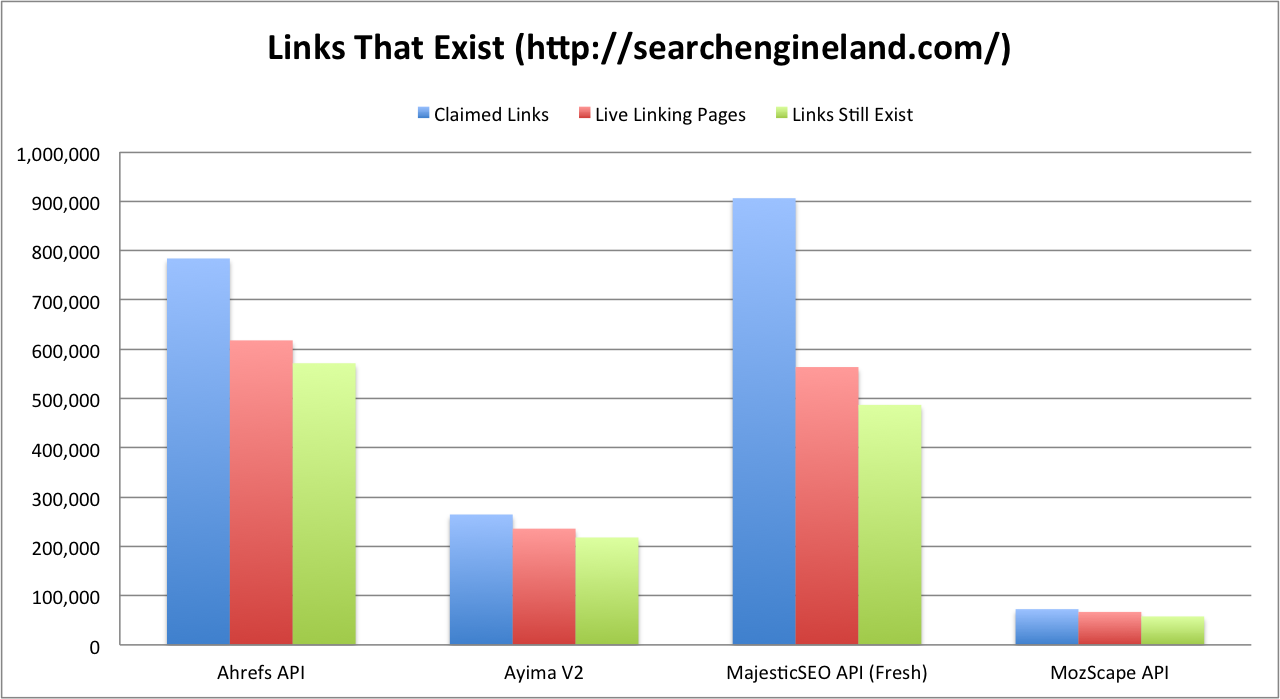

Then, the shootout analyzes the number of returned links that are live (i.e., they return a 200 HTTP status code) and still in existence (i.e., they still point to SEL’s website). The following graph illustrates the results of this analysis for each of the providers:

Based on these results, Ayima’s index has the highest live link accuracy, followed by Mozscape and Ahrefs (MajesticSEO is a very distant last). Additionally, Ahrefs is the clear winner in terms of live link numbers.
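To make the “live link” criteria concrete, here’s a minimal Python sketch of the two checks described above: the linking page must return a 200 status code, and its HTML must still contain an `<a href>` pointing at the target domain. This isn’t Rob’s actual methodology. The function and class names are hypothetical, and `fetch()` uses the standard library’s `urllib`, which follows redirects automatically, so a redirected page is judged by its final response.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def is_live_link(status_code, html, target_domain):
    """True if the page returned 200 and still links to target_domain (or a subdomain)."""
    if status_code != 200:
        return False
    collector = LinkCollector()
    collector.feed(html)
    for href in collector.hrefs:
        host = urlparse(href).hostname or ""  # hostname is already lowercased
        if host == target_domain or host.endswith("." + target_domain):
            return True
    return False


def fetch(url, timeout=10):
    """Return (status_code, html) for url; urlopen follows redirects automatically."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status, response.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        return err.code, ""
    except urllib.error.URLError:
        return None, ""  # DNS failure, refused connection, etc.
```

Usage would look like `status, html = fetch(linking_page_url)` followed by `is_live_link(status, html, "searchengineland.com")`, where `linking_page_url` is one of the backlinks a provider reported.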

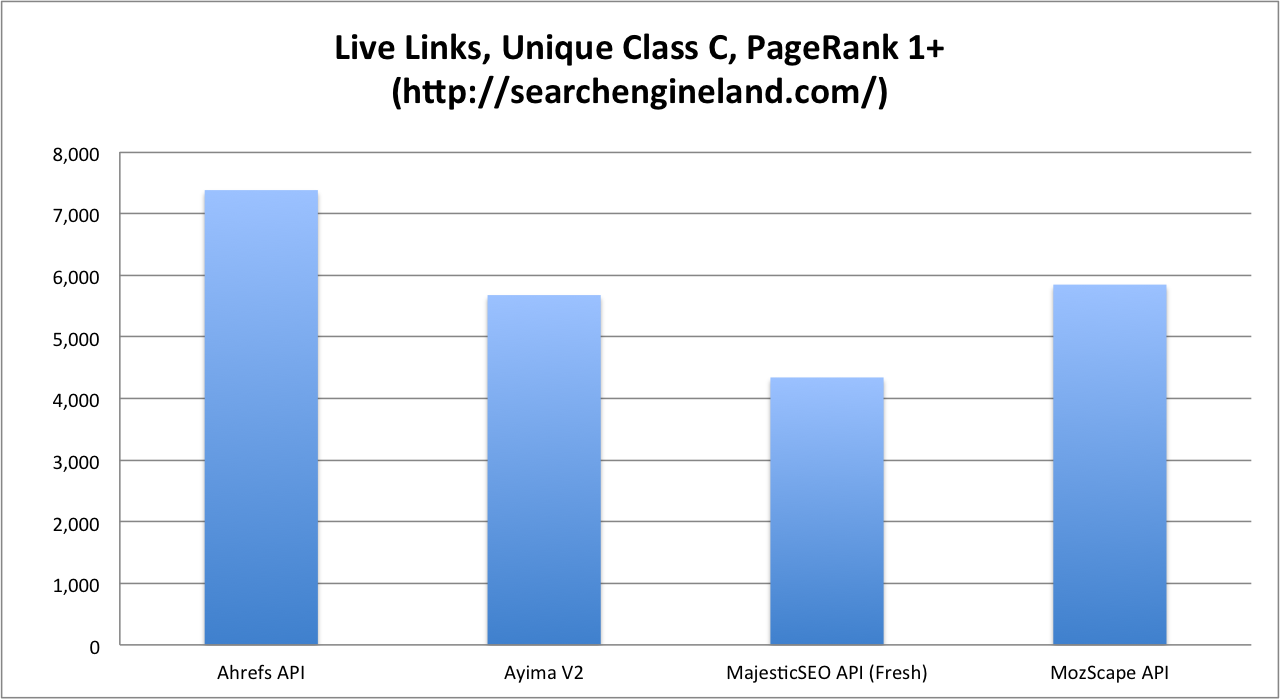

Next, the shootout compares the providers after “removing dead links, filtering by unique Class C IP blocks, and removing anything below a PageRank 1.” The results are shown in the following graph:

As the graph shows, Ahrefs is the winner. However, because of the amount of processing required to filter Ahrefs’ results down to this level, Rob recommends Mozscape instead.
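The filtering step itself is easy to sketch in Python. This is an illustrative assumption, not the shootout’s actual code: the `Backlink` record and its fields are hypothetical, a “Class C block” is treated as the /24 network (the first three octets) of an IPv4 address, and when several links share a block, the one with the highest PageRank is kept.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Backlink:
    source_url: str
    ip: str        # IPv4 address of the linking host (hypothetical field)
    pagerank: int  # toolbar PageRank, 0-10 (hypothetical field)
    live: bool     # result of a live-link check


def class_c_block(ip):
    """Return the /24 ("Class C") network an IPv4 address belongs to."""
    return ipaddress.ip_network(f"{ip}/24", strict=False)


def filter_backlinks(links, min_pagerank=1):
    """Drop dead links and links below min_pagerank, then keep one link per /24 block."""
    best = {}
    for link in links:
        if not link.live or link.pagerank < min_pagerank:
            continue
        block = class_c_block(link.ip)
        current = best.get(block)
        # Keep the highest-PageRank link seen so far for this block.
        if current is None or link.pagerank > current.pagerank:
            best[block] = link
    return list(best.values())
```

Keeping the strongest link per block is one reasonable choice; keeping the first link seen, or counting links per block instead, would work just as well for a rough comparison.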

Finally, based on the shootout’s results, Rob recommends using Ahrefs for link cleanup (i.e., identifying low quality links that need to be removed), MajesticSEO for mentions monitoring, and Mozscape for accurate competitor analysis.

For even more information about these observations and recommendations, check out the full post.

Common Technical SEO Problems and How to Solve Them

In this post, Paddy Moogan presents a number of common (and not so common) technical SEO problems as well as solutions to those problems. For many of the solutions, he also includes helpful resources that provide additional insights.

I encourage you to read the entire post, but here is a summary of the 10 problems that Paddy covers:

- Uppercase vs. Lowercase URLs – Many IIS servers respond to URLs that contain uppercase characters instead of redirecting them to their lowercase equivalents, which creates duplicate URLs. This problem is easily resolved with the URL Rewrite module for IIS.

- Multiple Versions of the Homepage – Many sites inadvertently maintain multiple entry points to the homepage (e.g., /default.aspx, /index.html, etc.). The easiest way to remove these duplicate entry points is to redirect them (using a 301 HTTP redirect) to the canonical homepage.

- Query Parameters Added to the End of URLs – Many e-commerce sites have URLs that contain various parameters (and are not particularly search engine friendly). Solutions depend on your particular setup, but strive for URLs that use keywords rather than obscure parameter names. You also want to make sure URL parameters are not creating duplicate content issues.

- Soft 404 Errors – A soft 404 occurs when a site serves an error page with a 200 HTTP status code (instead of a 404). The fix is simple: return a 404 HTTP status code for your error pages.

- 302 Redirects Instead of 301 Redirects – 302 HTTP redirects unnecessarily dilute your site’s link juice. Use 301 HTTP redirects!

- Broken/Outdated Sitemaps – Many sites create an XML Sitemap once and never update it. This is problematic because as new URLs are added (and old URLs are removed), the Sitemap becomes outdated. If you have an XML Sitemap, keep it updated so search engines have an accurate road map of your site.

- Ordering Your robots.txt File Incorrectly – If you order your robots.txt directives incorrectly, search engine crawlers might behave unexpectedly. Make sure you test your robots.txt file to ensure it works as intended.

- Invisible Character in robots.txt File – An invisible character in your robots.txt file can cause a somewhat obscure syntax error, but random issues like this are surprisingly common.

- Google Crawling base64 URLs – An uncommon technical problem is Google crawling automatically generated content (e.g., authentication tokens). You can typically identify this situation with Google Webmaster Tools and then solve it with creative robots.txt directives.

- Misconfigured Servers – If your server is incorrectly configured, any number of interesting scenarios can occur. To test your server’s configuration, use various user agents, and compare the corresponding HTTP responses.
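For the first two problems, the redirect logic is simple regardless of server. The post points to the URL Rewrite module for IIS; the Python function below is only a server-agnostic sketch of the same idea, with a hypothetical list of duplicate homepage paths. It returns the URL to 301-redirect to, or `None` when the requested URL is already canonical.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical duplicate homepage entry points; adjust for your own site.
HOMEPAGE_ALIASES = {"/default.aspx", "/index.html", "/index.htm", "/index.php"}


def canonical_redirect(url):
    """Return the URL to 301-redirect to, or None if url is already canonical.

    Lowercasing every path assumes the server treats paths case-insensitively
    (as IIS typically does); on a case-sensitive server, only redirect URLs you
    know have lowercase equivalents.
    """
    parts = urlsplit(url)
    path = parts.path or "/"
    canonical_path = path.lower()
    if canonical_path in HOMEPAGE_ALIASES:
        canonical_path = "/"
    if canonical_path == path:
        return None
    # The query string is preserved as-is; only the host and path are normalized.
    return urlunsplit((parts.scheme, parts.netloc.lower(), canonical_path,
                       parts.query, parts.fragment))
```

A request handler would call this first and, if it returns a URL, respond with a 301 status and that URL in the `Location` header.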
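To spot parameter-driven duplicate content, one approach is to group crawled URLs by a normalized key that ignores parameters which don’t change the page content. The set of ignored parameters below is hypothetical; which parameters are safe to ignore depends entirely on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that affect tracking or presentation, not content.
IGNORED_PARAMS = {"sessionid", "sort", "utm_source", "utm_medium", "utm_campaign"}


def content_key(url):
    """Normalize a URL: drop ignored parameters and sort the rest."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def duplicate_groups(urls):
    """Return {normalized_url: [original urls]} for every key shared by 2+ URLs."""
    groups = defaultdict(list)
    for url in urls:
        groups[content_key(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

Each group in the result is a candidate for a canonical URL (or for removing the parameter altogether).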
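Soft 404s and 302s both come down to sending the right status code. Here’s a minimal sketch as a plain Python WSGI app (the pages, the moved URL, and the error page are all hypothetical): real pages get a 200, permanently moved URLs get a 301 with a `Location` header, and everything else gets a friendly error page served with a genuine 404 status.

```python
# Hypothetical site content and permanently moved URLs.
PAGES = {"/": b"<h1>Home</h1>", "/contact": b"<h1>Contact us</h1>"}
MOVED = {"/contact-us.html": "/contact"}
NOT_FOUND_PAGE = b"<h1>Sorry, we couldn't find that page.</h1>"
HTML_HEADERS = [("Content-Type", "text/html; charset=utf-8")]


def app(environ, start_response):
    """WSGI app: 200 for real pages, 301 for moved pages, a true 404 for the rest."""
    path = environ.get("PATH_INFO") or "/"
    if path in PAGES:
        start_response("200 OK", HTML_HEADERS)
        return [PAGES[path]]
    if path in MOVED:
        # A permanent move gets a 301, not a 302.
        start_response("301 Moved Permanently", [("Location", MOVED[path])])
        return [b""]
    # Friendly error page, but served with a real 404 status so it isn't a soft 404.
    start_response("404 Not Found", HTML_HEADERS)
    return [NOT_FOUND_PAGE]
```

To try it locally, you could serve it with the standard library’s `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` and request a URL that doesn’t exist.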
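One way to keep a Sitemap from going stale is to regenerate it from your current list of URLs whenever the site changes, rather than maintaining it by hand. Here’s a minimal Python sketch using the standard library’s `xml.etree.ElementTree`. The page list and dates are hypothetical; in practice, they would come from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a Sitemap tree from (absolute_url, lastmod) pairs.

    lastmod is a W3C date string such as "2012-09-21".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > on output
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.ElementTree(urlset)
```

Running something like `build_sitemap(pages).write("sitemap.xml", encoding="utf-8", xml_declaration=True)` as part of each deploy means removed pages drop out and new pages appear automatically.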
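Directive order matters because crawlers don’t all resolve conflicting rules the same way. Python’s `urllib.robotparser`, for example, applies the first rule that matches a URL, whereas Google documents that the most specific (longest) matching rule wins. The sketch below uses hypothetical paths to show the same two rules giving different answers in Python’s parser depending on their order. Listing the more specific `Allow` before the broader `Disallow` gives the same result under either approach.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_lines, url, agent="*"):
    """Check url against robots.txt lines using Python's first-match parser."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(agent, url)


# Hypothetical rules: block /shop/ but allow the /shop/sale/ subsection.
disallow_first = ["User-agent: *", "Disallow: /shop/", "Allow: /shop/sale/"]
allow_first = ["User-agent: *", "Allow: /shop/sale/", "Disallow: /shop/"]

url = "https://www.example.com/shop/sale/boots"
print(allowed(disallow_first, url))  # False: the broader Disallow matches first
print(allowed(allow_first, url))     # True: the specific Allow matches first
```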
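An invisible character is hard to catch by eye but easy to catch programmatically. This sketch flags Unicode format characters (such as a byte order mark, U+FEFF, or a zero-width space, U+200B) and stray control characters, reporting each one’s line, column, and code point. It’s a generic check and doesn’t assume any particular character caused the problem in Paddy’s example.

```python
import unicodedata


def find_invisible_chars(text):
    """Return (line_number, column, codepoint) for each invisible character in text."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line):
            category = unicodedata.category(ch)
            # Cf = format characters (e.g. U+FEFF, U+200B);
            # Cc = control characters, except tab, which is harmless whitespace.
            if category == "Cf" or (category == "Cc" and ch != "\t"):
                findings.append((line_no, col, f"U+{ord(ch):04X}"))
    return findings
```

When reading the file’s bytes, decode them with `"utf-8"` rather than `"utf-8-sig"`; the latter silently strips a leading byte order mark, which would hide exactly the kind of character you’re looking for.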
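Here’s one way to script the user-agent comparison in Python: request the same URL with several user agents and flag any whose response differs from the first. The user-agent list is hypothetical (the Googlebot entry is the commonly published Googlebot string), and comparing body hashes is deliberately strict, so pages with rotating content (timestamps, ads) will show differences that aren’t misconfigurations.

```python
import hashlib
import urllib.error
import urllib.request

# Hypothetical user agents to compare; the first one is the baseline.
USER_AGENTS = {
    "browser": "Mozilla/5.0 (Windows NT 6.1; rv:15.0) Gecko/20100101 Firefox/15.0",
    "googlebot": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
}


def fetch_as(url, user_agent, timeout=10):
    """Return (status_code, sha256 of body) for url requested with user_agent."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            status, body = response.status, response.read()
    except urllib.error.HTTPError as err:
        status, body = err.code, err.read()
    return status, hashlib.sha256(body).hexdigest()


def differing_agents(responses):
    """Given {agent_name: (status, digest)}, return names that differ from the first entry."""
    names = list(responses)
    if not names:
        return []
    baseline = responses[names[0]]
    return [name for name in names[1:] if responses[name] != baseline]


def compare_user_agents(url, user_agents=USER_AGENTS):
    """Fetch url once per user agent and report which agents got a different response."""
    responses = {name: fetch_as(url, ua) for name, ua in user_agents.items()}
    return differing_agents(responses)
```

Note that `urlopen` follows redirects, so a server that redirects only certain user agents shows up here as a different final body rather than a different redirect status.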

Obviously, this isn’t an exhaustive list of the technical SEO problems that you’ll ever encounter, but it’s an excellent collection of common problems. If you have other suggestions for this list, please leave them in the comments below!

How to Be a Better SEO

In this post, Richard Baxter offers 29 ways to be a better SEO. I’m not going to list all of them because you should read his entire post, but I am going to summarize a few of my favorites:

- Don’t be happy with just “ok” – if you don’t love it, it isn’t ready – When I was growing up, my mother used to always tell me, “‘Good enough’ is NEVER good enough.” This tip reminded me of that advice.

- Be curious and always ask, why? – If you don’t ask questions, you’ll never evolve as a person. If you don’t understand something, take the initiative to figure it out. Ask questions. Be persistent.

- Decide you want to be successful in whatever you do – One of my favorite movie quotes of all time is, “Anything worth doing is worth doing right.” If you keep that in mind, you’ll go a long way in your professional career.

- Build your own website. What SEO doesn’t have a decent website? – If you can’t make your own site successful, how can you expect to make other sites successful?

- Always follow up even if you have to stay up all night to do it – Accountability is one of the biggest keys to success. If you promise to deliver, do whatever it takes to make good on that promise!

- Work towards making things a little bit better, every day – Keep moving forward, and keep improving. If you consistently improve every single day, just imagine how much you will accomplish in a year… in 5 years… in a decade!

Structured Social Sharing Formula – Whiteboard Friday

In this week’s edition of Whiteboard Friday, Dana Lookadoo presents 10 best practices for social sharing on your website. If you’d like to improve your site’s social engagement, check out this week’s video.

Over to You…

I hope you enjoyed this week’s recap, and I’d love to hear from you in the comments!! What were some of your favorite posts this week? Do you have any exciting news you’d like to share with the community?